Latest Version

Version

1.6

Update

December 06, 2024

Developer

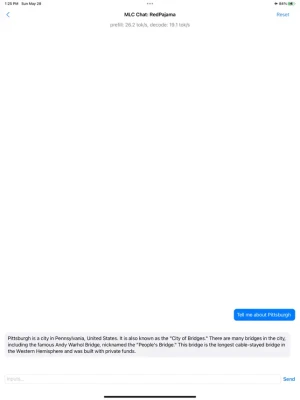

Chat with Open Language Models

Categories

Productivity

Platforms

iOS

File Size

1.8 GB

Downloads

0

License

Free

More About MLC Chat

Large language models running locally on your phone

MLC Chat lets users chat with open language models locally on iPads and iPhones. After a model is downloaded to the app, everything runs locally without server support, so the app works without an internet connection and does not record any information.

Because the models run locally, the app only works on devices with enough VRAM for the model being used.

MLC Chat is part of the open-source project MLC LLM, which allows any language model to be deployed natively on a diverse set of hardware backends and native applications. MLC Chat is a runtime that runs different open model architectures on your phone. The app is intended for non-commercial purposes. It allows you to run open language models downloaded from the internet, and each model may be subject to its own license.
